UBC Open Robotics

The results for the RoboCup@Home Education 2020 Online Competition are out! Check out our standing below.

Click here if you want to skip to my involvement in this team.

Overview

UBC Open Robotics is a student team of 60 students split into three subteams: ArtBot, PianoBot, and RoboCup@Home. I am a member of the software team in the RoboCup@Home subteam.

The objective of RoboCup@Home is to build a household assistant robot that can perform a variety of tasks, including carrying bags, introducing and seating guests at a party, and answering trivia questions. Open Robotics is developing a robot to compete in the 2021 RoboCup@Home Education Challenge. In the meantime, our subteam will compete in the 2020 competition using the Turtlebot 2 as our hardware platform.

The Challenge

The rules for the 2020 Challenge can be found here, but they boil down to three specific tasks:

- Carry My Luggage - Navigation task

- Find My Mates - Vision task

- Receptionist - Speech task

Carry My Luggage

Goal: The robot helps the operator to carry a bag to the car parked outside.

Starting at a predefined location, the robot has to find the operator and pick up the bag the operator is pointing to. After picking up the bag, the robot needs to indicate that it is ready to follow, and then it must follow the operator while dealing with 4 obstacles along the way (a crowd, a small object, a difficult-to-see 3D object, and a small blocked area).

Find My Mates

Goal: The robot fetches the information of the party guests for the operator who knows only the names of the guests.

Knowing only the operator, the robot must identify unknown people and meet those that are waving. Afterwards, it must remember the person and provide a unique description of that person, as well as that person’s location, to the operator.

Receptionist

Goal: The robot has to take two arriving guests to the living room, introducing them to each other, and offering the just-arrived guest an unoccupied place to sit.

Knowing the host of the party, John, the robot must identify unknown guests, request their names and favourite drinks and then point to an empty seat where the guest can sit.

My Contributions

My main contributions have been in speech recognition and in handle segmentation, targeting task 3 and task 1 respectively. I also worked on facial recognition earlier in the project.

Speech Recognition

You can find this repository here.

Speech recognition is implemented using PocketSphinx, which is based on CMUSphinx and offers two modes of operation: Keyword Spotting (KWS) and Language Model (LM).

KWS

Keyword spotting tries to detect specific keywords or phrases without imposing any grammar rules on top. Keyword spotting requires a .dic file and a .kwslist file.

The dictionary file is a basic text file that contains all the keywords and their phonetic pronunciation, for instance:

BACK B AE K

FORWARD F AO R W ER D

FULL F UH L

These files can be generated here.

The .kwslist file lists each keyword or phrase with a detection threshold that is tuned roughly to its length (longer phrases generally take smaller values), as follows:
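To make the dictionary format concrete, here is a minimal sketch that reads entries like the ones above into a Python mapping. The function name `parse_dictionary` is hypothetical and is not part of PocketSphinx or of the repository; it only assumes one entry per line, with the word first and its phonemes after it.

```python
def parse_dictionary(text):
    """Map each word to its list of phonemes, one entry per non-empty line."""
    entries = {}
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        word, phonemes = parts[0], parts[1:]
        entries[word] = phonemes
    return entries


sample = """BACK B AE K
FORWARD F AO R W ER D
FULL F UH L
"""
pronunciations = parse_dictionary(sample)
print(pronunciations["BACK"])  # ['B', 'AE', 'K']
```

A mapping like this is handy for quickly checking which words the recognizer will actually know about.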

BACK /1e-9/

FORWARD /1e-25/

FULL SPEED /1e-20/
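Since every word in a key phrase needs a pronunciation in the dictionary, a small consistency check can save some debugging. The sketch below is plain Python and does not call PocketSphinx; the helper names `parse_kwslist` and `missing_words` are hypothetical, and the parser assumes each line has the form `PHRASE /threshold/` as in the example above.

```python
def parse_kwslist(text):
    """Return {phrase: threshold} from lines such as 'FULL SPEED /1e-20/'."""
    keywords = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        phrase, _, rest = line.rpartition(" /")
        keywords[phrase.strip()] = float(rest.rstrip("/"))
    return keywords


def missing_words(keywords, dictionary_words):
    """List words used in key phrases that have no dictionary entry."""
    known = set(dictionary_words)
    missing = []
    for phrase in keywords:
        for word in phrase.split():
            if word not in known and word not in missing:
                missing.append(word)
    return missing


kwslist = """BACK /1e-9/
FORWARD /1e-25/
FULL SPEED /1e-20/
"""
keywords = parse_kwslist(kwslist)
print(missing_words(keywords, ["BACK", "FORWARD", "FULL"]))  # ['SPEED']
```

With only the three dictionary entries shown earlier, the check reports SPEED as missing, so the full dictionary would also need an entry for it.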

LM

Language model mode additionally imposes a grammar. To use this mode, .dic, .lm and .gram files are needed.

The dictionary file is the same as in KWS mode.

The .lm file can be generated, along with the .dic file, from a corpus of text, using this tool.

The generate_corpus.py script in SpeechRecognition/asr/resources sifts through the resource files from RoboCup’s GPSRCmdGenerator and creates a corpus. The .dic and .lm files are generated from it by using the above tool.

Finally, the .gram file specifies the grammar to be imposed. For instance, if the commands we are expecting are always an action followed by an object or person and then a location, it might look like:
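As a rough illustration of what building a corpus involves, the sketch below expands a few word lists into one sentence per combination. It is not the repository's generate_corpus.py: the word lists, the function name `build_corpus`, and the action-object-location sentence pattern are all assumptions made for this example.

```python
from itertools import product

# Hypothetical word lists, standing in for whatever the resource files contain.
actions = ["GET", "GIVE"]
objects = ["BOWL", "GLASS"]
locations = ["KITCHEN", "BEDROOM"]


def build_corpus(actions, objects, locations):
    """Return one sentence per action/object/location combination."""
    return [" ".join(words) for words in product(actions, objects, locations)]


corpus = build_corpus(actions, objects, locations)
corpus_text = "\n".join(corpus)  # plain text, one sentence per line
print(len(corpus))  # 8
print(corpus[0])    # GET BOWL KITCHEN
```

The resulting text is the kind of input from which a .dic and .lm pair can then be generated.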

public <rule> = <actions> [<objects>] [<names>] [<locations>];

<actions> = MOVE | STOP | GET | GIVE;

<objects> = BOWL | GLASS;

<names> = JOE | JOEBOB;

<locations> = KITCHEN | BEDROOM;
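To show which word sequences a rule like this accepts, here is a small plain-Python check that mirrors it: a mandatory action followed by an optional object, name, and location, in that order. This is only an illustration of the rule's shape, not how PocketSphinx evaluates grammars, and `matches_rule` is a hypothetical helper.

```python
ACTIONS = {"MOVE", "STOP", "GET", "GIVE"}
OBJECTS = {"BOWL", "GLASS"}
NAMES = {"JOE", "JOEBOB"}
LOCATIONS = {"KITCHEN", "BEDROOM"}


def matches_rule(utterance):
    """True if the words follow <actions> [<objects>] [<names>] [<locations>]."""
    words = utterance.upper().split()
    if not words or words[0] not in ACTIONS:
        return False  # the action is mandatory and must come first
    rest = words[1:]
    for optional_group in (OBJECTS, NAMES, LOCATIONS):
        if rest and rest[0] in optional_group:
            rest = rest[1:]  # consume at most one word per optional group
    return not rest  # anything left over violates the rule


print(matches_rule("GET BOWL KITCHEN"))  # True
print(matches_rule("GET KITCHEN BOWL"))  # False
```

Because the optional groups are checked in a fixed order, a location followed by an object is rejected, just as the rule requires.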

Handle Segmentation

You can find this repository here.

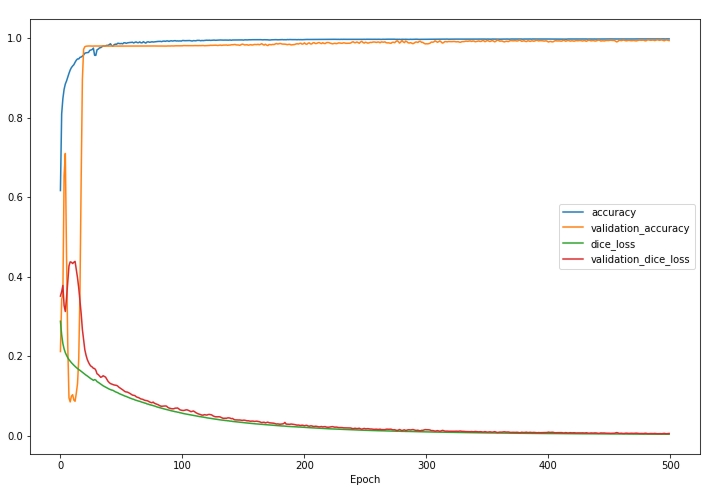

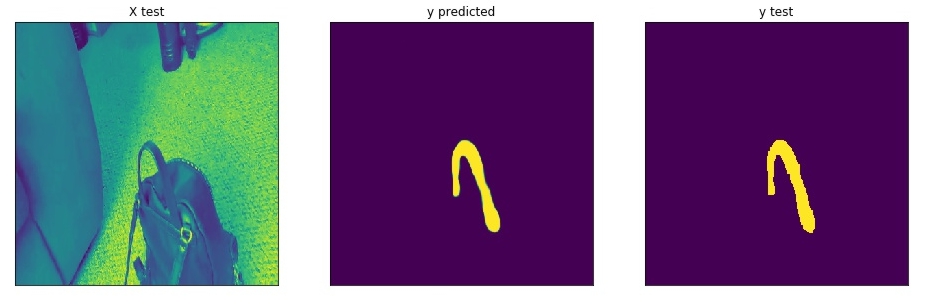

To be able to accurately pick up a bag, the robot must be able to detect where its handle is, as well as some information on how wide it is. To accomplish this, I trained a UNet model to segment images of handles.

UNet models take an image as input and output a mask defining a region of interest. Producing training data for these models requires labelling regions of interest on a variety of images. For that purpose I used two tools: LabelMe and MakeSense.ai.
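Labelling tools of this kind typically let you outline a region as a polygon, which then has to be turned into a pixel mask for training. The sketch below shows one way to do that in plain Python with a ray-casting test at each pixel centre. The function names and the sample polygon are hypothetical, and in practice a library rasterizer would normally be used instead.

```python
def point_in_polygon(x, y, polygon):
    """Ray casting: count polygon edges crossed by a ray going right from (x, y)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge spans the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(polygon, width, height):
    """Return a height x width grid of 0/1, testing each pixel centre."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


square = [(1, 1), (4, 1), (4, 4), (1, 4)]  # hypothetical labelled region
mask = polygon_to_mask(square, width=6, height=5)
print(mask[1])  # [0, 1, 1, 1, 0, 0]
```

Each labelled image then pairs with a mask like this as the target the network learns to predict.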

After training, model inference on the test set was promising.

Some post-processing on the mask then yields candidates for the apex of the handle and its width. This lets the pipeline output where the arm should grasp, as in the sequence below. Further work will integrate the depth channel of the RGB-D camera to locate the handle in depth.

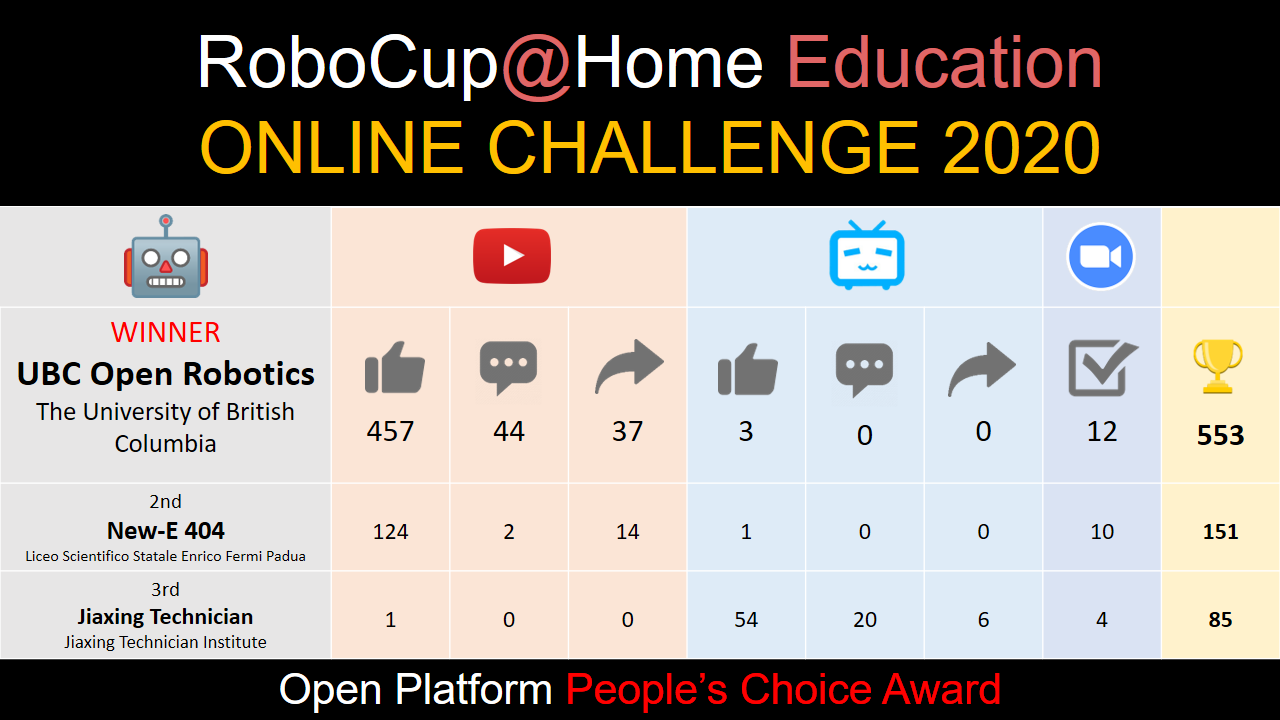
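The mask post-processing described above can be sketched roughly as follows. This is not the repository's code: it assumes the apex is the middle of the topmost row that contains mask pixels and that the width is the widest horizontal extent of the mask, and `handle_apex_and_width` is a hypothetical name.

```python
def handle_apex_and_width(mask):
    """Return ((row, col) of the apex, widest horizontal extent in pixels)."""
    apex = None
    width = 0
    for row_index, row in enumerate(mask):
        columns = [c for c, value in enumerate(row) if value]
        if not columns:
            continue  # no handle pixels on this row
        if apex is None:
            # Topmost row with foreground: use the middle of its extent as the apex.
            apex = (row_index, (columns[0] + columns[-1]) // 2)
        width = max(width, columns[-1] - columns[0] + 1)
    return apex, width


# Tiny hand-made mask of an arch-shaped handle (1 = handle pixel).
mask = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0, 0],
    [0, 1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 0, 1, 0],
]
print(handle_apex_and_width(mask))  # ((1, 3), 5)
```

The apex gives a candidate grasp point in image coordinates, and the width gives a rough idea of how far the gripper needs to open.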

2020 RoboCup@Home Education Online Challenge

Because the team’s goal is to have its own hardware platform ready for 2021, we (the software subteam) participated in the 2020 Online Challenge to put our software progress to the test on the Turtlebot 2 platform in the meantime.

Out of 8 finalists, we ended up in second place in the open category (meaning open hardware category), and first place in people’s choice.